This is the first paper of the paper reading series. I have been following the morning paper for a long time. Sadly in recent post, it is decided the morning paper is being paused and we don’t know when it will come back. Well I thought I would love to keep track of papers I am interested in so that later on I can come back and dig deeper with a specific interest. While waiting for the morning paper to come back (hopefully), I would start updating the papers here. The frequency would be one paper per week (hopefully), and in principal it will continue even after the morning paper is resumed for the purpose of personal anchor of papers of interests.

This week’s paper is in OSDI’20 from Haibo Chen’s group in Jiaotong University. Yet another Key-Value Store paper, but uses Machine Learning techniques to reduce the caching size.

Motivation

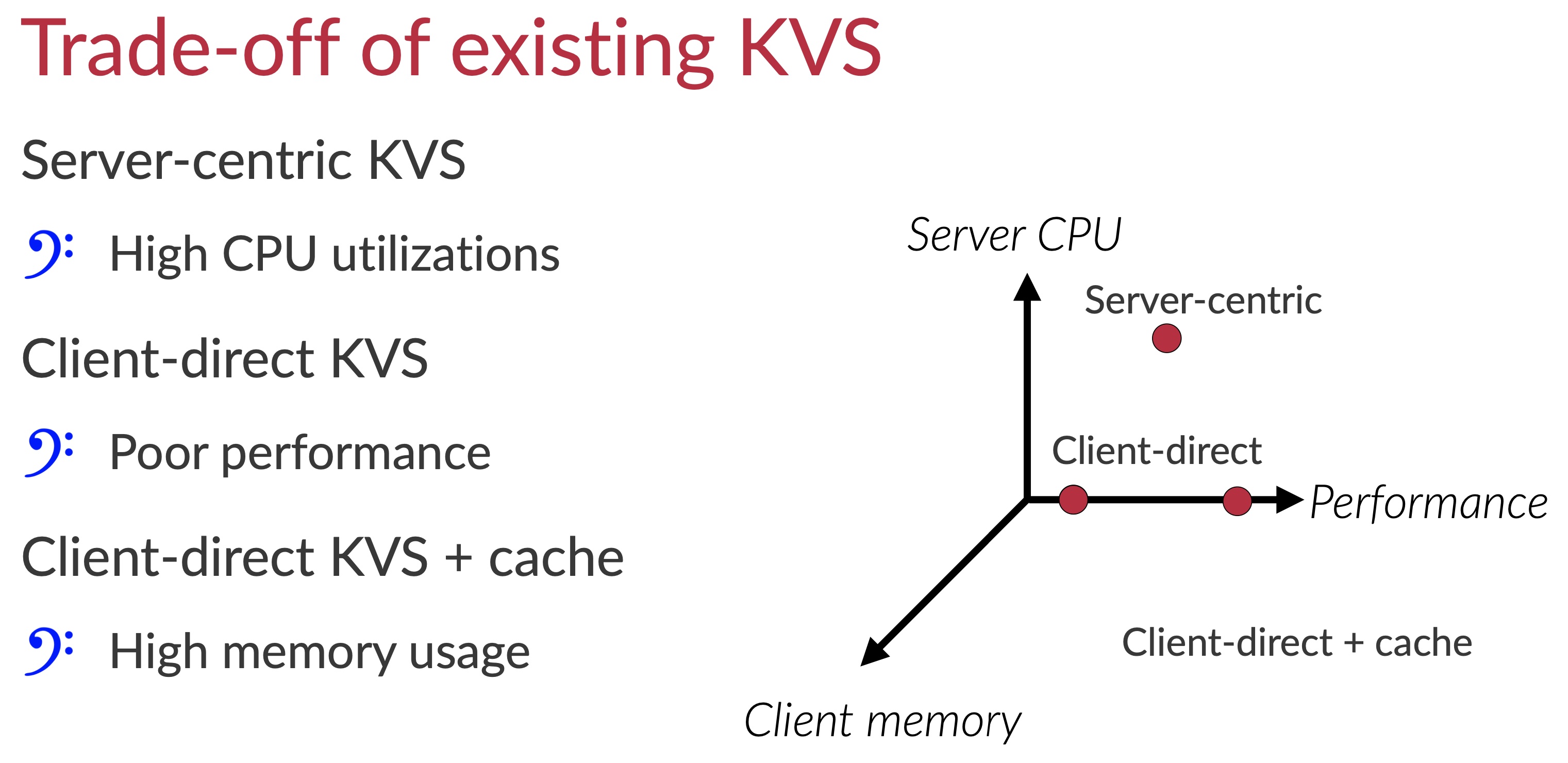

- Server-centric design consumes a lot of server-side CPU resources.

- CPU speed grows slower than network speed, thus becomes a performance bottleneck.

- CPU cache is constantly poluted by random access the data preserved in B+ tree (KV Store data structure).

- Client-direct design uses client-side NIC to directly read data from server through single-sided RDMA.

- Good: Bypass CPU bottleneck.

- Bad: Limited operations (read/write) makes traversing a B+ tree multiple round trips.

- Client-side caching of the B+ tree.

- Good: No need to do remote access, and use client-side CPU to traverse the B+ tree.

- Bad: Consumes too much memory.

- Bad: If there’s a cache miss, client has to get the whole tree from the server.

Design (XStore)

- Hybrid operations

- Server-centric for data write because NIC cannot handle complex tree insert/update.

- Client-direct get/scan to server hashtable index.

- Client-side Learned Cache.

- Learned Cache

- Enlightened from learned index @SIGMOD’18.

- At the server side, learn a model of the mapping of

Key = B+ address. Then send this model (Linear Regression) to client. - Learned model is usually very small.

- The model gives a range of addresses given a key -> 100% guarantee that the key is within these addresses.

- Two round trips to get a key

- Given the addresses, get all keys with the address -> determine which address is the actual one.

- Go the address and fetch the value.

- Challenge 1: learned index assumes the addresses are sorted -> not in B+ tree

- Make a logical address to leaf nodes that are sorted.

- Trasition table that maps

logical address = physical address. - Trasition table is also cached in client-side.

- Challenge 2: KV Store is dynamically changing due to updates

- Retrain the model after update -> invalidate client mode -> client can fetch the model on-demand.

- Client can retrive partial model as the model is partitioned.

Evaluation

- Testbed: 16-node cluster (24 cores), 2 ConnectX-4 RDMA (one node is server, rest is clients)

- Workload: YCSB

- Highlight:

- The memory footprint is very small (16B size for the model). 150MB vs 600MB

Comment

This is a paper that adopts an existing idea (learned index) in the KV Store and solves the problem of too much memory consumption in client side. I haven’t read the learned index paper and not sure where was that idea originally used (maybe database?). It’s a good adoption and well executed paper.